Hundreds of billions of dollars are being invested in AI compute clusters. Nvidia’s market cap is $3 trillion higher than it was at the launch of ChatGPT (roughly equivalent to the entire GDP of the United Kingdom). Despite this, GenAI has not yet dramatically impacted productivity in the workforce. Investors and think tanks expect this shift to occur as cognitive load increasingly moves to systems with LLMs at their core.

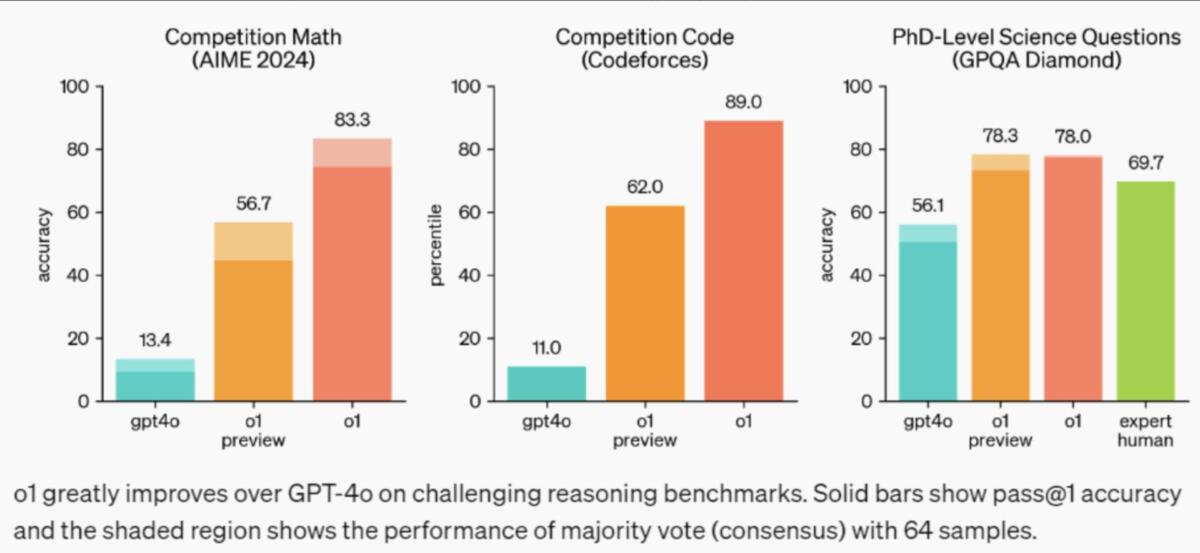

Frontier models continue to get smarter. The latest scaling paradigm involves transitioning models from pure System 1 Thinking to System 2 Thinking via Test-Time Compute. For particularly challenging problems, last month’s release of OpenAI’s o1-preview marks a major advancement in cognition.

Source: OpenAI

One significant way to turn these increasingly intelligent models into productivity gains is to harness their capabilities through agentic frameworks.

What is an Agentic Framework?

Agentic frameworks are a family of software architectures that enable LLMs to operate as goal-oriented agents. They provide structure and tools for LLMs to:

- Understand and decompose complex tasks

- Plan sequences of actions

- Execute those actions, often by calling external tools or APIs

- Evaluate results and adapt plans as needed
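The four capabilities above can be sketched as a simple loop. This is a minimal illustration, not the API of any particular framework: `fake_llm`, `run_agent`, `AgentRun`, and the `PLAN:` / `EXECUTE:` / `EVALUATE:` prompt prefixes are all hypothetical names, and the model is replaced by a deterministic stub so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-in for a model call: takes a prompt, returns text.
LLM = Callable[[str], str]


@dataclass
class AgentRun:
    goal: str
    plan: list[str] = field(default_factory=list)
    results: list[str] = field(default_factory=list)


def fake_llm(prompt: str) -> str:
    """Deterministic stub so the sketch runs without a real model."""
    if prompt.startswith("PLAN:"):
        return "search sources\nsummarize findings"
    if prompt.startswith("EVALUATE:"):
        return "ok"
    return f"done: {prompt.removeprefix('EXECUTE: ')}"


def run_agent(goal: str, llm: LLM, max_steps: int = 10) -> AgentRun:
    run = AgentRun(goal=goal)
    # Understand and decompose the task, then plan a sequence of actions.
    run.plan = [s for s in llm(f"PLAN: {goal}").splitlines() if s.strip()]
    steps_taken = 0
    # The step budget keeps a failing plan from looping forever.
    while run.plan and steps_taken < max_steps:
        step = run.plan.pop(0)
        # Execute the action (a real agent would often call a tool or API here).
        result = llm(f"EXECUTE: {step}")
        # Evaluate the result and adapt: keep it, or put the step back to retry.
        if llm(f"EVALUATE: {result}") == "ok":
            run.results.append(result)
        else:
            run.plan.insert(0, step)
        steps_taken += 1
    return run
```

Calling `run_agent("write a literature review", fake_llm)` walks through both planned steps and collects their results. In a real system each stubbed call would be a separate, narrowly scoped model invocation.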

So why do we need agentic frameworks?

Consider this thought experiment: You have access to an army of 10,000 interns. They have strong general knowledge, are good problem solvers, and can write software at the method level, but each lacks any prior context on your individual problems. The caveat is that each intern, once activated, can only perform a single, narrow task. How would you organize them to achieve meaningful work?

This situation is similar to what we face with large language models. They have short lives, need lots of context supplied, and are only capable of limited tasks on their own. Like interns, their output is not always reliable, and chaining them together is plagued with “game of telephone” issues.

To get LLMs to perform significant cognitive labor, we need to add some kind of harness around instances of them.

One of the challenges with LLMs is that they perform well when narrowly focused but tend to be less effective and more forgetful when dealing with broader tasks. As with human work, task decomposition leads to better outcomes.

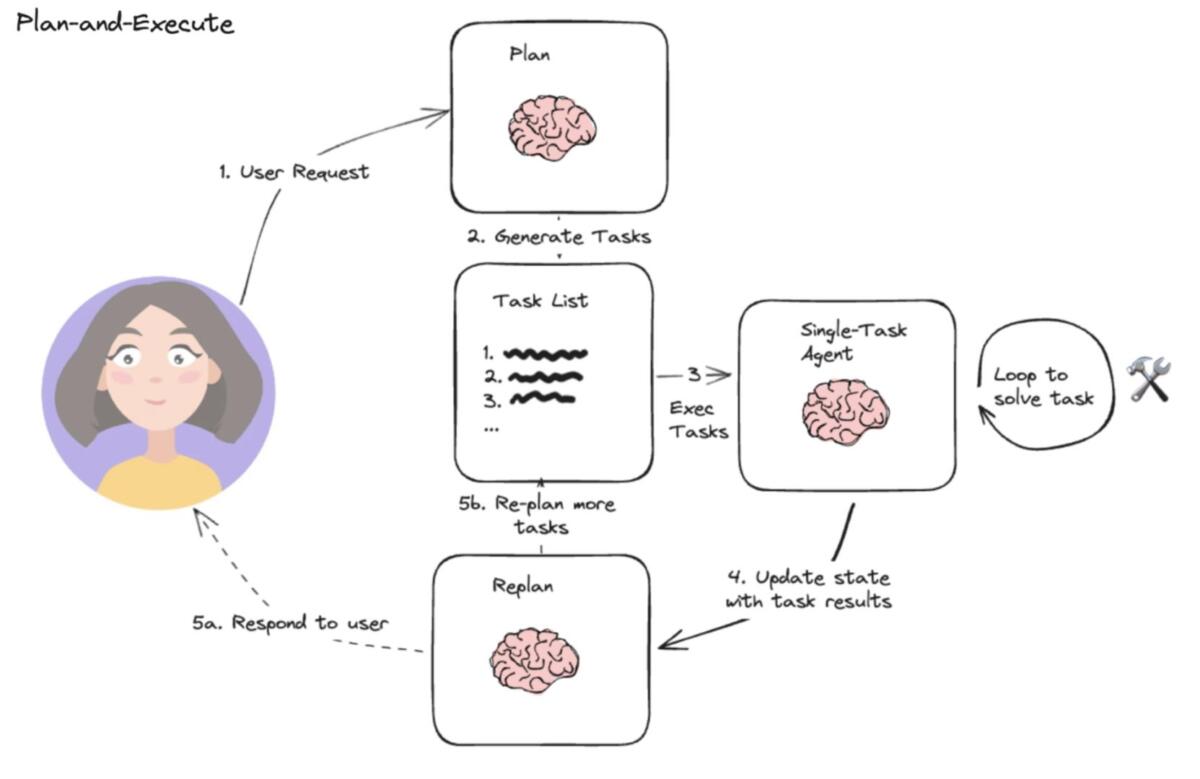

A Typical Plan-and-Execute Pattern in Agentic Frameworks

Source: LangChain

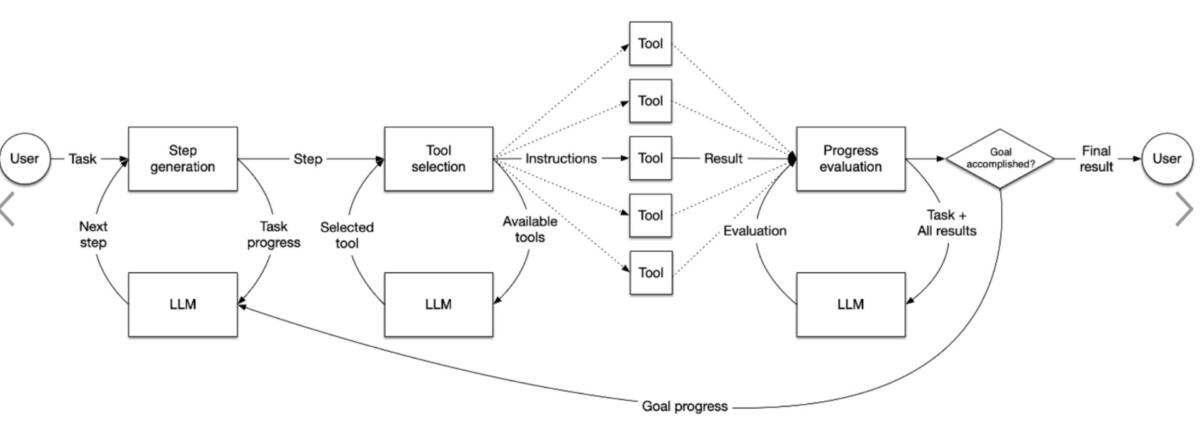

For agents to operate in the world, they need access to tools that allow them to retrieve information and take actions.

Source: LlamaIndex
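Tool access usually comes down to a registry of named functions plus a dispatcher that runs whichever call the model requests. The sketch below assumes the model emits a JSON object with `name` and `arguments` fields; that shape, the `tool` decorator, `dispatch`, and both example tools (including the canned DOI catalog) are hypothetical and not taken from LlamaIndex or any other library.

```python
import json
from typing import Callable

# Registry of tools the agent may call, keyed by function name.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the agent can request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_article(doi: str) -> str:
    # Hypothetical retrieval tool backed by canned data.
    catalog = {"10.1000/example": "Example Article Title"}
    return catalog.get(doi, "not found")


@tool
def add(a: float, b: float) -> str:
    return str(a + b)


def dispatch(tool_call_json: str) -> str:
    """Run a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        # Return errors as text so the model can read them and adapt.
        return f"error: unknown tool {name!r}"
    try:
        return TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:
        return f"error: bad arguments for {name}: {exc}"
```

Returning errors as strings rather than raising is a deliberate choice here: the result is fed back to the model, which can then correct the call on its next turn.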

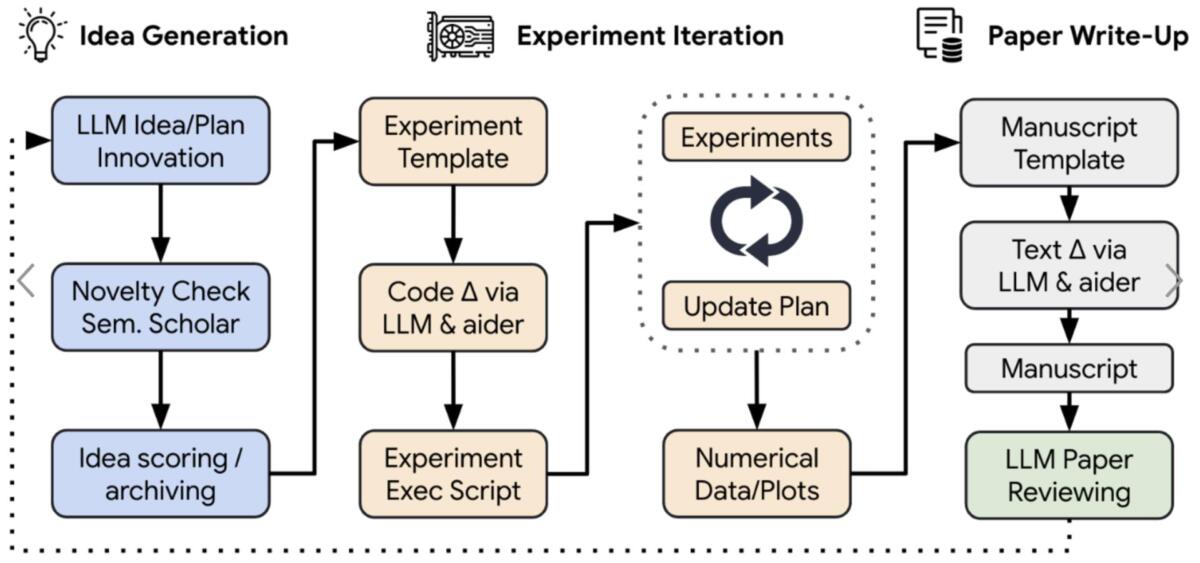

Agentic frameworks, in theory, allow arbitrarily complex orchestrations. Below is an example of a sophisticated implementation that performs basic research:

Source: The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery

The AI Scientist is a fully automated pipeline for end-to-end paper generation, enabled by recent advances in foundation models. Given a broad research direction starting from a simple initial codebase, such as an available open-source code base of prior research on GitHub, The AI Scientist can perform idea generation, literature search, experiment planning, experiment iterations, figure generation, manuscript writing, and reviewing to produce insightful papers. Furthermore, The AI Scientist can run in an open-ended loop, using its previous ideas and feedback to improve the next generation of ideas, thus emulating the human scientific community.

There are many agentic frameworks available, ranging from early pioneers like BabyAGI and AutoGPT to AutoGen, CrewAI, AgentGPT, and LangGraph.

Experimenting with LangGraph

At Silverchair, our team has aligned on LangGraph, which abstracts away much of the underlying complexity, such as coordination and control, state management, traceability, human-in-the-loop steering, and persistence, while still allowing low-level control. We favor LangGraph because we consider it best suited for production agents.

Replit Agent is one of the most advanced use cases of LangGraph and demonstrates how LLMs can work alongside humans to accomplish complex tasks.
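To make concerns like state management, traceability, persistence, and human-in-the-loop steering concrete, here is a toy graph runner written in plain Python. It is deliberately not LangGraph's API: `TinyGraph`, its methods, and the `draft` / `publish` nodes are hypothetical, and a real framework handles far more (branching, concurrency, durable storage).

```python
import json
from typing import Callable, Optional

State = dict
Node = Callable[[State], State]


class TinyGraph:
    """Toy sequential graph runner for illustration; NOT LangGraph's API."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.edges: dict[str, Optional[str]] = {}
        self.pause_before: set[str] = set()

    def add_node(self, name: str, fn: Node, next_node: Optional[str] = None,
                 needs_approval: bool = False) -> None:
        self.nodes[name] = fn
        self.edges[name] = next_node
        if needs_approval:
            self.pause_before.add(name)

    def run(self, state: State, start: str, approved: bool = False):
        """Run from `start`. Returns (state, checkpoint); checkpoint is None when done."""
        current: Optional[str] = start
        while current is not None:
            if current in self.pause_before and not approved:
                # Persistence: serialize the state so a human can review and resume later.
                return state, json.dumps({"state": state, "resume_at": current})
            approved = False  # an approval covers only one gated node
            # State management: merge the node's update into the shared state.
            state = {**state, **self.nodes[current](state)}
            # Traceability: record which nodes ran, in order.
            state["trace"] = state.get("trace", []) + [current]
            current = self.edges[current]
        return state, None


graph = TinyGraph()
graph.add_node("draft", lambda s: {"text": f"Draft about {s['topic']}"}, next_node="publish")
graph.add_node("publish", lambda s: {"published": True}, needs_approval=True)

# First run pauses before the gated node and hands back a checkpoint.
paused_state, checkpoint = graph.run({"topic": "agents"}, start="draft")

# After human approval, resume from the saved checkpoint.
saved = json.loads(checkpoint)
final_state, done = graph.run(saved["state"], start=saved["resume_at"], approved=True)
```

The point of the sketch is the shape of the problem: every one of these concerns has to be solved somewhere, and a framework's value lies in solving them once, consistently.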

As we familiarize ourselves with these tools, we’re simultaneously considering the most effective applications to solve real problems for our industry and our client community. As always, we look forward to sharing more about those applications as they take shape, to support ongoing education about AI and its adoption in scholarly publishing.

For more updates on Silverchair's approach to AI, or on our AI Lab, sign up for our newsletter.