A logical foundation

Theories and philosophies of mechanized human thought can be seen in the work of Chinese, Indian, and Greek philosophers from the first millennium BCE. By the 17th century, many of the world’s thinkers experimented with the idea that all rational thought could be broken down into a system like algebra or geometry. Scientists like Gottfried Leibniz began to envision a system of symbolic logic, in which human thinking is a rational and universal process of structuring and manipulating patterns or symbols.

From these early beginnings, we can start to see a logical framework to our ideas of artificial intelligence. These philosophies were limited by the inability to observe how human thought works on a neurological level, and by their conviction that there is a single, universal ideal for how thought works.

Clockwork robots

Automatons, in many ways, are the illusion of robotic artificial intelligence. In reality, they are self-operating (or mostly self-operating) mechanisms that follow specific sequences or perform simple tasks. Early automatons can be found as far back as Ancient Egypt, and by the 1200s, Al-Jazari appears to be one of the first to create human-like machines for practical purposes, such as offering soap and towels. Throughout the Renaissance and Enlightenment, these machines grew more complex: puppets and elaborate cuckoo clocks delighted crowds as machines, like those designed by Pierre Jaquet-Droz, drew pictures and wrote poems.

In these early machines, we can see the ambition that continues to mark the study of artificial intelligence today. Though many of these puppets and automatons were pure entertainment, we also see some of the first examples of yielding certain tasks to the machines.

The ‘Turing’ point in the 1950s

By the 1950s, mathematicians were theorizing that mathematical reasoning can be formalized. Many voices added to the conversation, most notably Alan Turing. His thinking machine has inspired many subsequent generations of scientists. He also gives his name to the ‘Turing Test,’ which evaluates a machine’s ability to exhibit intelligent behavior that is indistinguishable from a human’s.

As scientists began to turn their attention to the possibility of intelligent machines, in 1956, Dartmouth hosted a Summer Research Project on Artificial Intelligence. This workshop is widely considered the start of artificial intelligence research and focused on key topics: the rise of symbolic methods, early expert systems, and deductive vs inductive systems.

This period marked the formal start of the study of artificial intelligence, which led to the development of today’s technology. As we look at today’s AI ecosystem, it’s worth remembering the collaboration of this era and the unique opportunity for blue skies thinking.

Sci-Fi minus the fiction in the 1960s and 1970s

There was no shortage of funding for artificial intelligence research in the late 1950s and into the 1960s. As we saw advanced computers solving word problems and learning to speak English, scientists predicted a fully intelligent machine was no more than 20 years away. And while advances were made in neural networks, and even humanoid robots (like 1972’s WABOT-1 from Waseda University in Japan), scientists hit a wall by the mid-1970s. Ultimately, our computing power was just not advanced enough to achieve the promised results.

This period of AI history was marked by amazing predictions, which had to be walked back when we began to realize the difficulty and complexity of what we were trying to achieve. We’re already seeing similar critiques with today’s technology: new tools released with massive fanfare, only to find new shortcomings as we look under the hood.

Finding focus in the 1980s and 1990s

After funding setbacks and public criticism, this era of AI persevered with projects focusing on more narrow AI applications. AI research also faced criticism from ethicists and other researchers who argued that human reasoning did not involve the ‘symbol processing’ theorized by Leibniz and others but was instead instinctive and unique to humans.

Despite these critiques, the 1980s saw a rise in expert systems, which solve problems based on a specific domain of knowledge. Neural networks were also revived, with breakthroughs from physicists like John Hopfield in nonlinear networks.

The pendulum swung back and forth: funding dried up in the late 1980s, and new movements emerged, such as Nouvelle AI, which argued that a machine needs a body with which it can perceive the world in order to have true intelligence.

We still see characteristics of this period in today’s AI ecosystem: ethical concerns and the rollercoaster of funding and public perception. One of the key takeaways that we can apply today from this era is the focus on application. If we consider AI as a tool to solve a problem, how would we approach AI research and development?

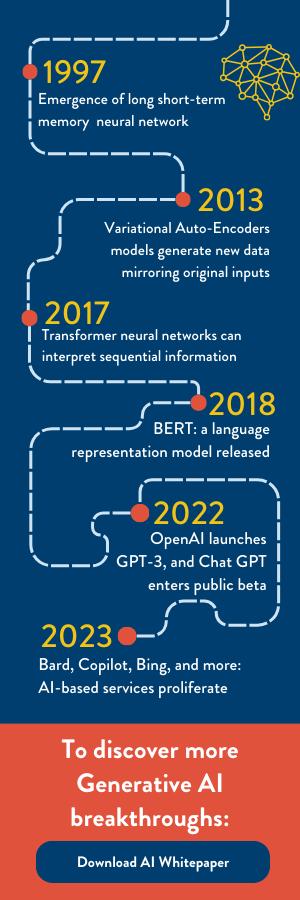

Deep learning + big data = AI everywhere

Today’s AI landscape has ballooned in size. From large language models (LLMs) to retrieval augmented generation (RAG), there’s a whole new vocabulary to learn as we navigate this technology. This era of AI is marked by advances in deep learning, which learns from unstructured data to mimic how the human brain processes information, and big data, which gathers massive amounts of data into sets that can be studied to reveal patterns and trends.

While artificial intelligence is getting bigger, and ‘smarter,’ all the time, it is important to use this time to experiment with AI applications that solve specific challenges. We need to retain that focus of the 1980s without losing the optimism of the 1960s. In Silverchair’s AI Lab, we aim to strike the balance between a fearlessness to experiment and try new things, and a deep focus on the gaps in scholarly publishing infrastructure and delivery.

The study of artificial intelligence has always been a collaboration. We hope you’ll join us for our upcoming AI Lab Reports Webinar Series – subscribe to our newsletter to be the first to know when registration opens.